Building images from a standard Dockerfile typically relies on interactive access to a Docker daemon, which requires root access on your machine to run. This can make it difficult to build container images in environments that can’t easily or securely expose their Docker daemons, such as Kubernetes clusters. In addition, Kubernetes deprecated Docker as a container runtime in version 1.20 and removed its built-in Docker support (dockershim) in version 1.24. Docker is being replaced by runtimes that implement the Container Runtime Interface (CRI) created for Kubernetes. Hence, a daemonless alternative such as Kaniko will only become more relevant.


What is Kaniko?

Kaniko is a tool to build container images from a Dockerfile, inside a container or Kubernetes cluster. Kaniko doesn’t depend on a Docker daemon and executes each command within a Dockerfile completely in userspace. This enables building container images in environments that can’t easily or securely run a Docker daemon, such as a standard Kubernetes cluster.

Because there is no dependency on a daemon process, Kaniko can run in environments where the user has no root access to the host, such as a Kubernetes cluster.

Moreover, Kaniko is often used with Tekton, a cloud-native solution for automating CI/CD pipelines. Kaniko can serve as one of the pipeline steps, handling the container packaging and publishing workload. You can learn more about how to create a complete Tekton pipeline in the tutorial HERE.

How does Kaniko work?

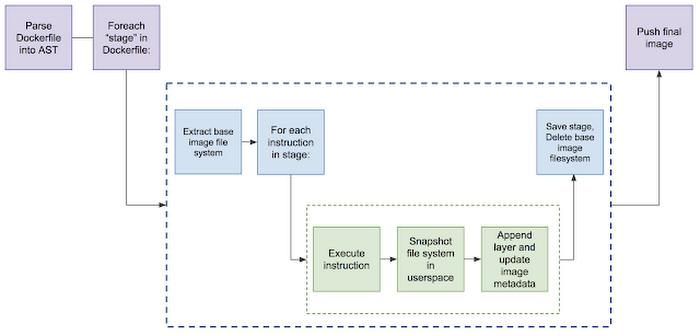

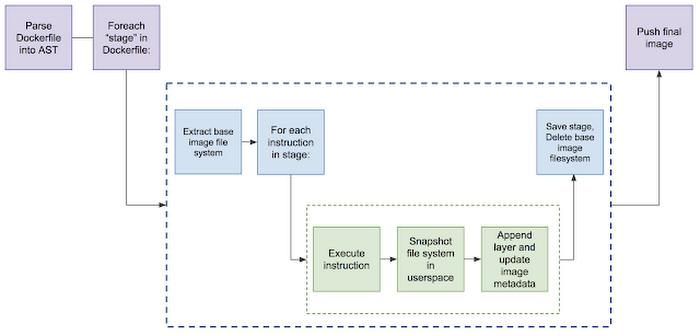

Kaniko works entirely in userspace using the executor image, gcr.io/kaniko-project/executor, which runs inside a container such as a Kubernetes pod. The executor extracts the filesystem of the base image (the FROM image in the Dockerfile), then executes the Dockerfile commands in order and takes a snapshot of the filesystem after each command.

If there are changes to the file system, the executor takes a snapshot of the filesystem change as a “diff” layer and updates the image metadata.
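To make the snapshot-and-diff idea concrete, here is a minimal Python sketch. It is not Kaniko's implementation (Kaniko is written in Go and can also take file metadata into account); it only hashes the contents of every file under a directory before and after a build step and treats added or modified files as the new layer. In a real OCI layer, deleted files are recorded as whiteout (`.wh.`) entries; here they are simply listed. Directories and metadata are ignored for simplicity.

```python
from __future__ import annotations

import hashlib
import os
import tempfile
from pathlib import Path
from typing import Callable


def snapshot(root: Path) -> dict[str, str]:
    """Map each regular file under root (as a relative path) to a SHA-256 of its contents."""
    state: dict[str, str] = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = Path(dirpath) / name
            rel = path.relative_to(root).as_posix()
            state[rel] = hashlib.sha256(path.read_bytes()).hexdigest()
    return state


def diff_layer(before: dict[str, str], after: dict[str, str]) -> dict[str, list[str]]:
    """Compare two snapshots: added/modified files go into the layer, removed ones need whiteouts."""
    changed = sorted(p for p, digest in after.items() if before.get(p) != digest)
    deleted = sorted(p for p in before if p not in after)
    return {"changed": changed, "deleted": deleted}


def run_step(root: Path, step: Callable[[Path], object]) -> dict[str, list[str]] | None:
    """Snapshot, run one build step, snapshot again, and return a layer only if files changed."""
    before = snapshot(root)
    step(root)
    layer = diff_layer(before, snapshot(root))
    if not layer["changed"] and not layer["deleted"]:
        return None  # no file changes, so no new layer
    return layer


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        # A step that touches no files produces no layer.
        print(run_step(root, lambda r: None))  # None
        # A step that writes a file produces a layer containing it.
        print(run_step(root, lambda r: (r / "main.go").write_text("package main\n")))
        # {'changed': ['main.go'], 'deleted': []}
```

The first case mirrors the `No files changed in this command, skipping snapshotting.` line that Kaniko prints for a `WORKDIR` step in the demo log.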

You can learn more about the mechanism HERE.

Why Kaniko?

As explained at the beginning of this post, building container images with Docker may be the most familiar and convenient approach. However, it has several drawbacks, the most important of which are security concerns.

First of all, you cannot simply run Docker commands inside a container. The `docker` image ships the Docker client, but the container is isolated from the node, so the client has no Docker daemon to talk to unless the Docker socket is mounted into the container. Use the following YAML file as an example:

```yaml
---
apiVersion: v1
kind: Pod
metadata:
  name: docker
spec:
  containers:
    - name: docker
      image: docker
      args: ["sleep", "10000"]
  restartPolicy: Never
```

If you try to build an image from inside this pod, the build fails with an error such as `Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?`. The Docker client in the container cannot reach any Docker daemon, because no Docker socket is available to it. Now let’s take a look at the modified YAML file with the Docker socket mounted into the container:

```yaml
---
apiVersion: v1
kind: Pod
metadata:
  name: docker
spec:
  containers:
    - name: docker
      image: docker
      args: ["sleep", "10000"]
      volumeMounts:
        - mountPath: /var/run/docker.sock
          name: docker-socket
  restartPolicy: Never
  volumes:
    - name: docker-socket
      hostPath:
        path: /var/run/docker.sock
```

This time the build succeeds, because the Docker client in the container talks to the Docker daemon on the node through the mounted socket. In other words, we are no longer running Docker inside the container; we are using the Docker daemon on the node. However, this approach raises severe security concerns!

Mounting the socket means that anybody who can run containers in the cluster can issue any command to the Docker daemon on the node, which runs as root. It would be straightforward to take control of the node where that container is running and, from there, potentially of the whole cluster. This is a huge security issue.

Moreover, Kubernetes deprecated Docker as a container runtime in version 1.20 and removed dockershim in version 1.24, so many clusters now run CRI runtimes such as containerd or CRI-O and have no Docker daemon on their nodes. On such nodes there is no Docker socket to mount, so this approach does not work at all.

Now that we have discussed why building images with Docker might not be a good idea, let us look at why Kaniko is a better fit. Kaniko does not rely on Docker, yet it still builds images from a standard Dockerfile, which significantly reduces the learning cost. Moreover, Kaniko runs the build as a Pod; once the build finishes, the Pod terminates and releases the resources it was allocated, such as CPU and memory.

Demo

Let’s now start creating the configuration files required for running Kaniko in the Kubernetes cluster.

Step #1: Download the source code from my GitHub repo.

Step #2: Create a secret for the remote container registry.

Kaniko needs registry credentials to push the image, so we create a secret resource in the cluster that holds them. The following demo uses Docker Hub as the remote container registry.

```shell
### Export credentials as environment variables

export REGISTRY_SERVER=https://index.docker.io/v1/
# Replace `[...]` with the registry username
export REGISTRY_USER=[...]
# Replace `[...]` with the registry password
export REGISTRY_PASS=[...]
# Replace `[...]` with the registry email
export REGISTRY_EMAIL=[...]

### Create the secret

kubectl create secret \
  docker-registry regcred \
  --docker-server="$REGISTRY_SERVER" \
  --docker-username="$REGISTRY_USER" \
  --docker-password="$REGISTRY_PASS" \
  --docker-email="$REGISTRY_EMAIL"
```

You can find more information about creating a secret tailored to your container registry Here.
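Under the hood, `kubectl create secret docker-registry` stores the credentials under the secret's `.dockerconfigjson` key, in the same JSON format as Docker's `config.json`, and the Kaniko pod in Step #3 mounts that key as `/kaniko/.docker/config.json`. The following Python sketch builds an equivalent payload just to show its shape. It is an illustration, not kubectl's own code: the exact key order and whitespace kubectl emits may differ, and the credentials in the example are placeholders. Note that Kubernetes stores this payload base64-encoded in the Secret, which is an encoding, not encryption.

```python
from __future__ import annotations

import base64
import json


def docker_config_json(server: str, username: str, password: str, email: str | None = None) -> str:
    """Build a Docker config.json payload shaped like a docker-registry secret's .dockerconfigjson."""
    # "auth" is base64("username:password"), the value used for HTTP Basic auth.
    auth = base64.b64encode(f"{username}:{password}".encode()).decode()
    entry = {"username": username, "password": password, "auth": auth}
    if email:
        entry["email"] = email
    return json.dumps({"auths": {server: entry}}, indent=2)


if __name__ == "__main__":
    # Placeholder credentials for illustration only.
    print(docker_config_json("https://index.docker.io/v1/", "demo-user", "demo-pass", "demo@example.com"))
```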

Step #3: Prepare the configuration

Now that the secret is created, we can deploy Kaniko with the following pod configuration:

```yaml
---
apiVersion: v1
kind: Pod
metadata:
  name: kaniko
  labels:
    app: kaniko-executor
spec:
  containers:
    - name: kaniko
      image: gcr.io/kaniko-project/executor:debug
      args:
        - --dockerfile=Dockerfile
        - --context=git://github.com/miooochi/kaniko-demo.git
        - --destination=hikariai/kaniko-demo:1.0.0
      volumeMounts:
        - name: kaniko-secret
          mountPath: /kaniko/.docker
  restartPolicy: Never
  volumes:
    - name: kaniko-secret
      secret:
        secretName: regcred
        items:
          - key: .dockerconfigjson
            path: config.json
```

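Notes:

- `--dockerfile` specifies the path to the Dockerfile within the build context.
- `--context` specifies the build context, here a remote Git repository.
- `--destination` specifies the image to push, including repository and tag; since no registry host is given, it resolves to Docker Hub.
- The `regcred` secret is mounted at `/kaniko/.docker`, so Kaniko reads the registry credentials from `/kaniko/.docker/config.json`.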
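The `--destination` value `hikariai/kaniko-demo:1.0.0` contains no registry host, so it is resolved against Docker Hub, which is why the `regcred` secret was created for `https://index.docker.io/v1/`. The Python sketch below illustrates the usual normalization rules for short image references. It is a simplified assumption-based sketch, not Kaniko's parser: digests (`@sha256:...`) and name validation are not handled, and `index.docker.io` is used as the default registry name because that is how the Kaniko log later in this post reports Docker Hub.

```python
from __future__ import annotations

DEFAULT_REGISTRY = "index.docker.io"  # Docker Hub, as named in the Kaniko log


def normalize_reference(ref: str) -> tuple[str, str, str]:
    """Split an image reference into (registry, repository, tag).

    Simplified sketch: digests (@sha256:...) and validation are not handled.
    """
    name, tag = ref, "latest"
    # The text after the last ":" is a tag only if that ":" follows the last "/";
    # otherwise it is a registry port, as in localhost:5000/app.
    colon = ref.rfind(":")
    if colon > ref.rfind("/"):
        name, tag = ref[:colon], ref[colon + 1:]

    first, sep, rest = name.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        # The first path component looks like a host, so it is the registry.
        return first, rest, tag
    # Otherwise the image lives on Docker Hub; single-name images are "official" ones.
    repository = name if sep else f"library/{name}"
    return DEFAULT_REGISTRY, repository, tag


if __name__ == "__main__":
    for ref in ["hikariai/kaniko-demo:1.0.0", "golang:1.15", "alpine"]:
        print(ref, "->", normalize_reference(ref))
```

The same rules explain why the base images `golang:1.15` and `alpine` in the build log are fetched from Docker Hub.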

Building Images Using Kaniko

Apply the configuration

```shell
kubectl apply -f kaniko-git.yaml
```

Check out the logs

```console
# kev @ archlinux in ~/workspace/kaniko-demo on git:master x [14:23:18]
$ kubectl logs kaniko --follow
Enumerating objects: 40, done.
Counting objects: 100% (40/40), done.
Compressing objects: 100% (26/26), done.
Total 40 (delta 19), reused 25 (delta 9), pack-reused 0

INFO[0010] Resolved base name golang:1.15 to dev
INFO[0010] Resolved base name golang:1.15 to build
INFO[0010] Resolved base name alpine to runtime
INFO[0010] Retrieving image manifest golang:1.15
INFO[0010] Retrieving image golang:1.15 from registry index.docker.io
INFO[0015] Retrieving image manifest golang:1.15
INFO[0015] Returning cached image manifest
INFO[0015] Retrieving image manifest alpine
INFO[0015] Retrieving image alpine from registry index.docker.io
INFO[0019] Built cross stage deps: map[1:[/app/app]]
INFO[0019] Retrieving image manifest golang:1.15
INFO[0019] Returning cached image manifest
INFO[0019] Executing 0 build triggers
INFO[0019] Skipping unpacking as no commands require it.
INFO[0019] WORKDIR /workspace
INFO[0019] cmd: workdir
INFO[0019] Changed working directory to /workspace
INFO[0019] No files changed in this command, skipping snapshotting.
INFO[0019] Deleting filesystem...
INFO[0019] Retrieving image manifest golang:1.15
INFO[0019] Returning cached image manifest
INFO[0019] Executing 0 build triggers
INFO[0019] Unpacking rootfs as cmd COPY ./app/* /app/ requires it.
INFO[0044] WORKDIR /app
INFO[0044] cmd: workdir
INFO[0044] Changed working directory to /app
INFO[0044] Creating directory /app
INFO[0044] Taking snapshot of files...
INFO[0044] Resolving srcs [app/*]...
INFO[0044] COPY ./app/* /app/
INFO[0044] Resolving srcs [app/*]...
INFO[0044] Taking snapshot of files...
INFO[0044] RUN go build -o app
INFO[0044] Taking snapshot of full filesystem...
INFO[0047] cmd: /bin/sh
INFO[0047] args: [-c go build -o app]
INFO[0047] Running: [/bin/sh -c go build -o app]
INFO[0048] Taking snapshot of full filesystem...
INFO[0049] Saving file app/app for later use
INFO[0049] Deleting filesystem...
INFO[0049] Retrieving image manifest alpine
INFO[0049] Returning cached image manifest
INFO[0049] Executing 0 build triggers
INFO[0049] Unpacking rootfs as cmd COPY --from=build /app/app / requires it.
INFO[0052] COPY --from=build /app/app /
INFO[0052] Taking snapshot of files...
INFO[0052] CMD ./app
INFO[0052] Pushing image to hikariai/kaniko-demo:1.0.0
INFO[0059] Pushed image to 1 destinations
```
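The `Built cross stage deps: map[1:[/app/app]]` line shows that, in this multi-stage build, Kaniko works out up front which files a later stage copies out of an earlier one, so it can save them (`Saving file app/app for later use`) before deleting that stage's filesystem. The Python sketch below illustrates that bookkeeping under simplified assumptions. It is not Kaniko's code, and the stage list is a hypothetical reconstruction from the log lines (stages `dev`, `build`, and `runtime`), not the actual Dockerfile from the demo repository. It maps each stage index to the paths referenced by `COPY --from`.

```python
from __future__ import annotations


def cross_stage_deps(stages: list[dict]) -> dict[int, list[str]]:
    """Map a stage index to the paths that later stages copy out of it with COPY --from."""
    index_by_name = {s["name"]: i for i, s in enumerate(stages) if s.get("name")}
    deps: dict[int, list[str]] = {}
    for stage in stages:
        for cmd in stage["commands"]:
            parts = cmd.split()
            if len(parts) < 4 or parts[0].upper() != "COPY" or not parts[1].startswith("--from="):
                continue
            source = parts[1][len("--from="):]
            # --from accepts a stage name or a numeric stage index.
            idx = int(source) if source.isdigit() else index_by_name.get(source)
            if idx is None:
                continue  # --from refers to an external image, not a build stage
            deps.setdefault(idx, []).extend(parts[2:-1])  # every source path except the destination
    return deps


if __name__ == "__main__":
    # Hypothetical stages reconstructed from the log; the real Dockerfile is in the demo repo.
    stages = [
        {"name": "dev", "commands": ["WORKDIR /workspace"]},
        {"name": "build", "commands": ["WORKDIR /app", "COPY ./app/* /app/", "RUN go build -o app"]},
        {"name": "runtime", "commands": ["COPY --from=build /app/app /", "CMD ./app"]},
    ]
    print(cross_stage_deps(stages))  # {1: ['/app/app']}
```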

Finally, check that the container image has been pushed successfully to the remote container registry.

Conclusion

In this post, we walked through a basic introduction to Kaniko. We saw how to use it to build an image and how to set up a Kubernetes cluster with the configuration Kaniko needs to run.

To sum up, Kaniko is an open-source tool for building container images from a Dockerfile without privileged root access. With Kaniko, we can both build an image from a Dockerfile and push it to a registry. Since it requires neither a Docker daemon nor privileged containers, you can run Kaniko in a standard Kubernetes cluster, in Google Kubernetes Engine, or in any environment that has no access to privileges or a Docker daemon.